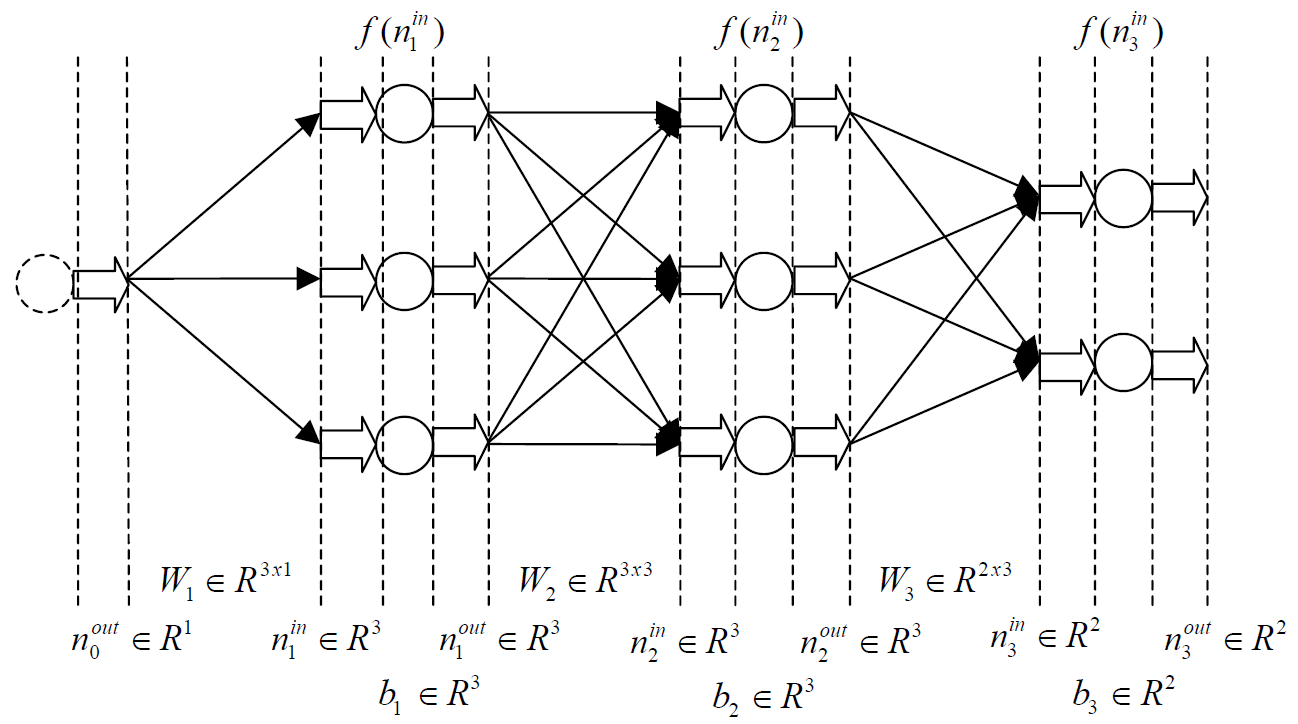

In a multi-layer neural network, the weights of the connections between two adjacent layers can be treated as a matrix: the neurons of one layer form the columns, and the neurons of the other layer form the rows. The figure below shows a network and its parameter matrices.

The vectors and matrices in the figure have the following meanings:

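$\mathbf{n}_{\mathrm{in}}^{l}$: the input of layer $l$.

$\mathbf{n}_{\mathrm{out}}^{l}$: the output of layer $l$. The input vector of the neural network is $\mathbf{n}_{\mathrm{out}}^{0}$, and the output vector is $\mathbf{n}_{\mathrm{out}}^{L}$ ($l = 1, \dots, L$).

$\mathbf{b}^{l}$: the bias (threshold) vector of layer $l$.

$\mathbf{W}^{l}$: the weight parameter matrix between layers $l-1$ and $l$.

$\mathbf{n}_{\mathrm{out}}^{l} = f(\mathbf{n}_{\mathrm{in}}^{l})$: the activation function of the neurons.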
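
A minimal sketch of how these parameters could be stored with NumPy, assuming that the rows of $\mathbf{W}^{l}$ belong to the neurons of layer $l$ and the columns to the neurons of layer $l-1$. The helper `init_params`, the layer sizes and the random initialization are hypothetical choices for illustration:

```python
import numpy as np

def init_params(sizes, seed=0):
    """Create W^l and b^l for l = 1..L (hypothetical helper).

    sizes[0] is the length of the network input n_out^0 and sizes[l] is the
    number of neurons in layer l. Assumed layout: W^l has one row per neuron
    of layer l and one column per neuron of layer l-1.
    """
    rng = np.random.default_rng(seed)
    W = [None]  # index 0 is unused so that W[l] corresponds to W^l
    b = [None]
    for l in range(1, len(sizes)):
        W.append(rng.normal(scale=0.1, size=(sizes[l], sizes[l - 1])))
        b.append(np.zeros(sizes[l]))
    return W, b

W, b = init_params([4, 5, 3, 2])  # 4 inputs, layers of 5, 3 and 2 neurons
for l in range(1, len(W)):
    print(l, W[l].shape, b[l].shape)
# 1 (5, 4) (5,)
# 2 (3, 5) (3,)
# 3 (2, 3) (2,)
```

Leaving index 0 empty keeps the code indices identical to the layer indices $l = 1, \dots, L$ used in the text.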

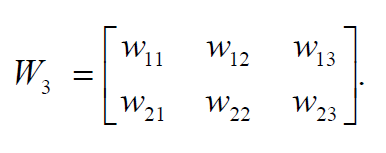

For example, the weight matrix of the third layer can be written as follows:

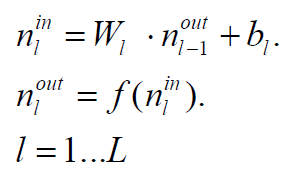

Using matrices for forward propagation:
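
As a sketch, assuming the bias is added to the weighted input (if it is treated as a threshold that is subtracted, the sign of $\mathbf{b}^{l}$ flips), forward propagation computes $\mathbf{n}_{\mathrm{in}}^{l} = \mathbf{W}^{l}\,\mathbf{n}_{\mathrm{out}}^{l-1} + \mathbf{b}^{l}$ and $\mathbf{n}_{\mathrm{out}}^{l} = f(\mathbf{n}_{\mathrm{in}}^{l})$ for $l = 1, \dots, L$. The logistic sigmoid used for $f$ and the function name `forward` are assumptions:

```python
import numpy as np

def sigmoid(x):
    # Assumed activation f; the text does not fix a particular f.
    return 1.0 / (1.0 + np.exp(-x))

def forward(W, b, x, f=sigmoid):
    """Return the lists n_in and n_out for one input pattern x = n_out^0.

    Assumes n_in^l = W^l n_out^(l-1) + b^l (bias added) and n_out^l = f(n_in^l).
    Index 0 of W and b is unused so that W[l] is W^l.
    """
    n_in = [None]
    n_out = [np.asarray(x, dtype=float)]
    for l in range(1, len(W)):
        n_in.append(W[l] @ n_out[l - 1] + b[l])
        n_out.append(f(n_in[l]))
    return n_in, n_out

# Tiny hand-checkable network: 2 inputs -> 2 hidden neurons -> 1 output, zero biases.
W = [None, np.array([[1.0, 0.0], [0.0, 1.0]]), np.array([[1.0, -1.0]])]
b = [None, np.zeros(2), np.zeros(1)]
n_in, n_out = forward(W, b, [0.0, 0.0])
print(n_out[-1])  # → [0.5]
```

Each layer costs one matrix-vector product, which is the main practical benefit of the matrix formulation.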

The backpropagation algorithm:
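
One common way to write the backward step, assuming the squared error $E = \tfrac{1}{2}\lVert \mathbf{n}_{\mathrm{out}}^{L} - \mathbf{t} \rVert^{2}$ for one pattern, defining the error $\mathbf{e}^{l}$ of layer $l$ as the derivative of $E$ with respect to $\mathbf{n}_{\mathrm{in}}^{l}$, and writing $\odot$ for the element-wise product:

$$
\mathbf{e}^{L} = \left(\mathbf{n}_{\mathrm{out}}^{L} - \mathbf{t}\right) \odot f'\!\left(\mathbf{n}_{\mathrm{in}}^{L}\right),
\qquad
\mathbf{e}^{l} = \left( \left(\mathbf{W}^{l+1}\right)^{\mathsf{T}} \mathbf{e}^{l+1} \right) \odot f'\!\left(\mathbf{n}_{\mathrm{in}}^{l}\right), \quad l = L-1, \dots, 1
$$

The transposed weight matrix carries the error in the opposite direction to the forward pass.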

The vector $\mathbf{e}$ denotes the error of the current layer, and $\mathbf{t}$ is the current target vector. Once the errors of all layers have been determined, the gradients can be computed in a single forward pass:
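
A sketch of these gradients, assuming the bias is added to the weighted input, $\mathbf{e}^{l}$ is the derivative of the per-pattern error with respect to $\mathbf{n}_{\mathrm{in}}^{l}$, and the total error $E$ is the average of the per-pattern errors (subscript $p$ marks quantities computed for pattern $p$):

$$
\frac{\partial E}{\partial \mathbf{W}^{l}} = \frac{1}{P}\sum_{p=1}^{P} \mathbf{e}_{p}^{l}\left(\mathbf{n}_{\mathrm{out},p}^{l-1}\right)^{\mathsf{T}},
\qquad
\frac{\partial E}{\partial \mathbf{b}^{l}} = \frac{1}{P}\sum_{p=1}^{P} \mathbf{e}_{p}^{l}
$$

Each term $\mathbf{e}_{p}^{l}\left(\mathbf{n}_{\mathrm{out},p}^{l-1}\right)^{\mathsf{T}}$ is an outer product with the same shape as $\mathbf{W}^{l}$.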

where $P$ is the number of training patterns. The algorithm above must be executed for every pattern.
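
Putting the steps together, here is a NumPy sketch under stated assumptions: sigmoid activations (so $f'(\mathbf{n}_{\mathrm{in}}) = \mathbf{n}_{\mathrm{out}} \odot (1 - \mathbf{n}_{\mathrm{out}})$), bias added to the weighted input, and the squared error averaged over the $P$ patterns. The names `backprop` and `loss` and all data are hypothetical. The loop over `zip(X, T)` runs the algorithm once per pattern, and a central finite difference checks one gradient entry:

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def backprop(W, b, X, T):
    """Average gradients of E = (1/P) sum_p 0.5 * ||n_out^L - t_p||^2.

    X has shape (P, n_0) and T has shape (P, n_L); row p is one pattern.
    Assumes sigmoid activations and n_in^l = W^l n_out^(l-1) + b^l.
    W[0] and b[0] are unused placeholders so that W[l] is W^l.
    """
    L = len(W) - 1
    P = X.shape[0]
    gW = [None] + [np.zeros_like(W[l]) for l in range(1, L + 1)]
    gb = [None] + [np.zeros_like(b[l]) for l in range(1, L + 1)]
    for x, t in zip(X, T):  # the algorithm is executed for every pattern
        # Forward propagation for this pattern.
        n_out = [x]
        for l in range(1, L + 1):
            n_out.append(sigmoid(W[l] @ n_out[l - 1] + b[l]))
        # Output-layer error, then propagate it back through (W^(l+1))^T.
        e = [None] * (L + 1)
        e[L] = (n_out[L] - t) * n_out[L] * (1 - n_out[L])
        for l in range(L - 1, 0, -1):
            e[l] = (W[l + 1].T @ e[l + 1]) * n_out[l] * (1 - n_out[l])
        # Gradients: outer product of the error and the previous layer's output.
        for l in range(1, L + 1):
            gW[l] += np.outer(e[l], n_out[l - 1])
            gb[l] += e[l]
    # Average over the P patterns.
    return [None] + [g / P for g in gW[1:]], [None] + [g / P for g in gb[1:]]

def loss(W, b, X, T):
    """Mean squared error over all patterns (same assumptions as backprop)."""
    total = 0.0
    for x, t in zip(X, T):
        a = x
        for l in range(1, len(W)):
            a = sigmoid(W[l] @ a + b[l])
        total += 0.5 * np.sum((a - t) ** 2)
    return total / X.shape[0]

rng = np.random.default_rng(0)
sizes = [3, 4, 2]
W = [None] + [rng.normal(size=(sizes[l], sizes[l - 1])) for l in range(1, 3)]
b = [None] + [rng.normal(size=sizes[l]) for l in range(1, 3)]
X = rng.normal(size=(5, 3))   # P = 5 made-up input patterns
T = rng.uniform(size=(5, 2))  # made-up target vectors
gW, gb = backprop(W, b, X, T)

# Central finite-difference check of the weight W^1[0, 0].
h = 1e-6
Wp = [None] + [w.copy() for w in W[1:]]
Wp[1][0, 0] += h
Wm = [None] + [w.copy() for w in W[1:]]
Wm[1][0, 0] -= h
numeric = (loss(Wp, b, X, T) - loss(Wm, b, X, T)) / (2 * h)
print(abs(numeric - gW[1][0, 0]))  # small when the gradients are correct
```

Accumulating the per-pattern outer products and dividing by $P$ at the end gives the averaged gradient; a weight update would then subtract a learning rate times these values.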