I had been thinking for a while about the most flexible way to add Convolutional layers to my existing API when I read an article about so-called Deep Residual Learning. Roughly speaking, Residual Learning requires a network architecture built from blocks of stacked layers, in which a shortcut connection skips those layers during forward propagation and adds the block's input to its output. I have not implemented it yet, so I do not want to go into more detail right now. I just want to point out that implementing it would have required adding a whole new algorithm with a whole new network structure to the original architecture. This was the point when I realised that if the smallest unit of a network that an algorithm interacts with is a single layer rather than a whole network of layers, then implementing Residual Learning becomes much simpler, and the same change also provides a way to add Convolutional layers.

I have kept the old algorithms, at least for now, as they already work very efficiently and cover a wide range of problems. The redesign lives alongside them as a separate architecture in a separate DLL.

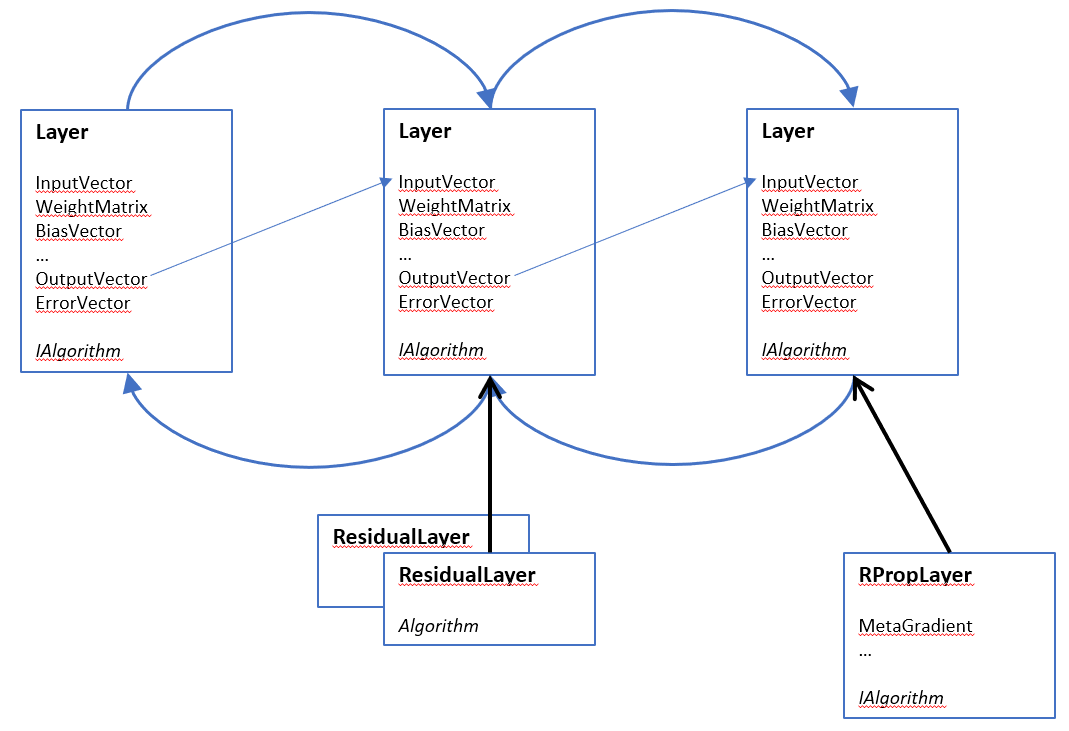

With this architecture, each layer connects to other layers through a common interface of inputs and outputs. Each layer also connects to the algorithms through an interface and holds pointers to the previous and the following layer, so the layers form a doubly linked list. Each layer type has its own polymorphic implementation that contains the vectors and matrices needed by the algorithm. For example, the Resilient Propagation algorithm needs MetaGradient and Decision matrices on top of the basic weight matrices and bias vectors, so it needs a Resilient Propagation Layer that inherits from the original Layer implementation.
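To make the structure above more concrete, here is a minimal sketch in Python. It is not the code from the repository: the class names, the `connect` and `forward_pass` helpers, and the plain linear `forward` without an activation function are all assumptions made for illustration. Only the idea of linked layers and an algorithm-specific subclass with MetaGradient and Decision matrices comes from the description above.

```python
from __future__ import annotations

from typing import List, Optional


class Layer:
    """Hypothetical base layer: weights, biases and links to its neighbours."""

    def __init__(self, n_inputs: int, n_outputs: int) -> None:
        # One row of weights per output neuron, one bias per output neuron.
        self.weights = [[0.0] * n_inputs for _ in range(n_outputs)]
        self.biases = [0.0] * n_outputs
        # Links that turn a chain of layers into a doubly linked list.
        self.previous: Optional[Layer] = None
        self.next: Optional[Layer] = None

    def connect(self, following: Layer) -> Layer:
        """Link this layer to the one after it, in both directions."""
        self.next = following
        following.previous = self
        return following

    def forward(self, inputs: List[float]) -> List[float]:
        """Common input/output interface (assumed: linear map, no activation)."""
        return [
            sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(self.weights, self.biases)
        ]


class ResilientPropagationLayer(Layer):
    """Adds the extra per-weight state that Resilient Propagation needs."""

    def __init__(self, n_inputs: int, n_outputs: int) -> None:
        super().__init__(n_inputs, n_outputs)
        # Same shape as the weight matrix; what exactly is stored in each
        # matrix is an assumption here, only the names come from the post.
        self.meta_gradient = [[0.0] * n_inputs for _ in range(n_outputs)]
        self.decision = [[0.0] * n_inputs for _ in range(n_outputs)]


def forward_pass(first: Layer, inputs: List[float]) -> List[float]:
    """Walk the list from the first layer, feeding each output onwards."""
    signal = inputs
    layer: Optional[Layer] = first
    while layer is not None:
        signal = layer.forward(signal)
        layer = layer.next
    return signal
```

Because the algorithm only ever talks to one layer at a time, a new layer type (convolutional, or a residual block with a shortcut) would in principle only need its own subclass, with no change to the code that walks the list.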

This new architecture does not deal with distributed algorithms yet, so only Backpropagation and Resilient Propagation are available. Once I have these working, I will also add a general distributed execution that can handle training-data-oriented distribution. The code is already available in the repository; however, I am only just about to start creating wrappers and test applications for it.