Since the last AI Fight article I have not made a conscious effort to improve the performance of the algorithms. Instead, I have been busy updating the existing interfaces and applications and creating new interfaces. I have rewritten all test harnesses from scratch and added a new WebAPI interface. I also started experimenting with an actual build in the cloud, in the form of an Azure Worker Role application.

Platform

Up until the cloud implementation I kept the main platform in the project configuration at 32 bit, and I used a 64 bit platform only for the CUDA configuration, because Nvidia no longer supports 32 bit code. As a result I had no performance measurements in 64 bit, and I assumed that it could not be any better than the 32 bit code. But then I was forced to create an “AnyCPU” configuration for the cloud, for which I had to build all native libraries in 64 bit. I decided to try out the 64 bit version of the Test Applications as well. To my surprise, the performance was better than in 32 bit.

Intrinsics

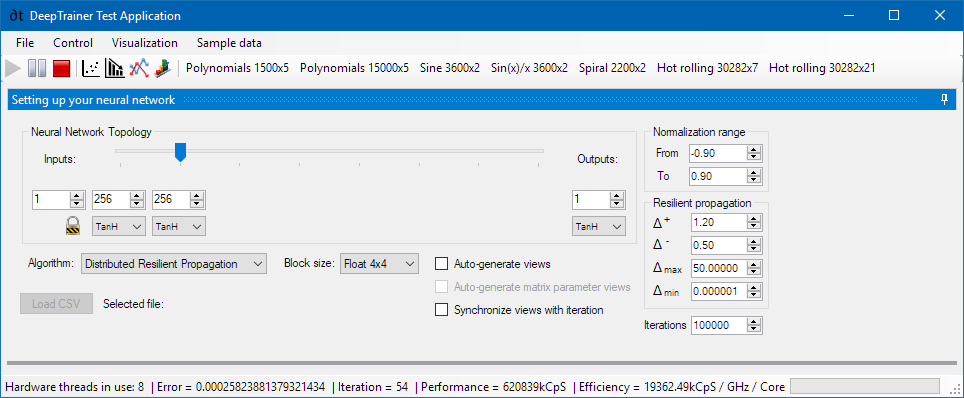

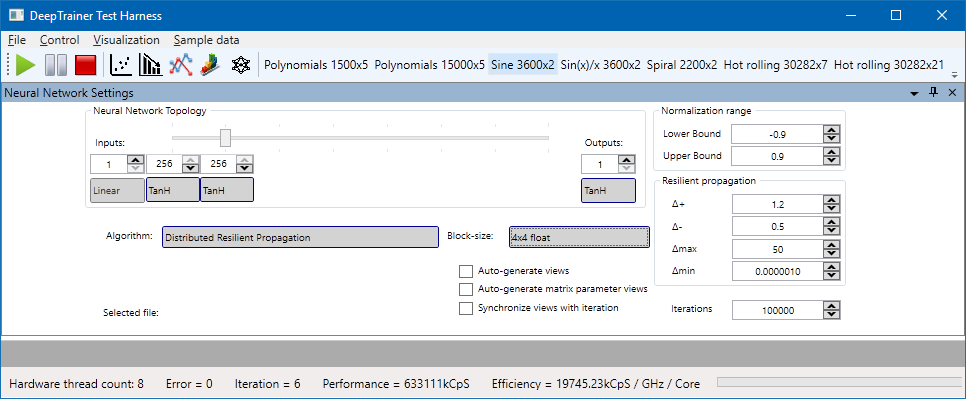

When I reimplemented the test harness applications I also made the block size changeable through the user interface. You can now choose between 4×4 and 8×8 block matrices. Before this change I was using 8×8 block matrices by default, thinking that the more calculations are done with SIMD instructions, the faster my algorithm would become. I was in for a big surprise, though. When I enabled 4×4 just to see whether it worked as it should, I immediately noticed a performance increase of around 5-10%. This was almost certainly because I was testing with networks that have 1 input and 1 output: with 4×4 blocks the number of inputs was treated as 4, and with 8×8 blocks it was treated as 8. When the first and the last hidden layers contained 256 neurons, this resulted in quite a noticeable difference in the weight matrix sizes (8×256 is double the size of 4×256), so I had to find a strategy to eliminate this distortion. I decided to use a 1x8x256x256x8x1 network topology, in which the difference from rounding up to 4 or 8 becomes insignificant. The result was that the 8×8 blocks came out as winners, although by only around 1.5%. I would have expected a larger boost from double width SIMD instructions.

I was able to achieve 18800kCpS/GHz/Core with 4×4 blocks, and 19280kCpS/GHz/Core with 8×8 blocks using a 1x8x256x256x8x1 network topology.
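The padding effect described above can be illustrated with a small sketch. It assumes fully connected layers, that every layer width is simply rounded up to the next multiple of the block size, and that bias terms are ignored. The helper names `round_up` and `padded_weight_count` are hypothetical and not taken from the actual code.

```python
from typing import Sequence


def round_up(n: int, block: int) -> int:
    """Round n up to the nearest multiple of block."""
    return -(-n // block) * block


def padded_weight_count(layers: Sequence[int], block: int) -> int:
    """Count weights when every layer width is padded to a multiple of block.

    Assumption: fully connected layers, bias terms ignored.
    """
    padded = [round_up(n, block) for n in layers]
    return sum(a * b for a, b in zip(padded, padded[1:]))


for topology in ([1, 256, 256, 1], [1, 8, 256, 256, 8, 1]):
    w4 = padded_weight_count(topology, 4)
    w8 = padded_weight_count(topology, 8)
    extra = (w8 / w4 - 1) * 100
    print(topology, w4, w8, f"{extra:.2f}% more weights with 8x8 blocks")
```

Under these assumptions the 8×8 padding adds about 3% more weights for the 1x256x256x1 topology (69632 versus 67584), but less than 0.1% for 1x8x256x256x8x1 (69760 versus 69696), which is why the second topology gives a fairer comparison of the two block sizes.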

Further Measurements

I am including here only my measurements with the 1x256x256x1 network topology, as my results are still better with fewer hidden layers.

As you can see, the new record is 633MCpS at 4.5GHz on 8 hardware threads. This was achieved with an efficiency of 19745 kCpS/GHz/Core, which is much higher than anything I have achieved before, and the increase is mostly due to moving from the 32 bit to the 64 bit build. Of course I was not displaying any graphs during the performance measurements, as these would have affected the results too.

Unfortunately I no longer have access to the 40 core Xeon monster workstation I used for some measurements before last July, but I am sure that with the current code I would easily achieve 1.5GCpS performance. This estimate comes from a linear extrapolation. When I achieved 0.933GCpS on the Xeon machine, I measured 0.318GCpS on my Core-i7. My Core-i7 now measures 0.633GCpS, so scaling linearly I should expect 1.86GCpS on the Xeon machine. (Let’s be conservative: I am already happy if I see 1.5.)
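The linear scaling estimate above can be reproduced with a few lines. This is only a sketch of the arithmetic: it assumes that the Xeon machine speeds up by the same factor as the Core-i7, which has not been measured. The function name `scale_estimate` is hypothetical.

```python
def scale_estimate(old_target: float, old_local: float, new_local: float) -> float:
    """Scale an old measurement on the target machine by the speed-up
    observed on the local machine (assumes both scale by the same factor)."""
    return old_target * (new_local / old_local)


# Values in GCpS, taken from the text: Xeon before, Core-i7 before, Core-i7 now.
estimate = scale_estimate(0.933, 0.318, 0.633)
print(f"{estimate:.2f} GCpS")  # prints "1.86 GCpS"
```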