Dynamic Graph Architecture: Enabling Composite Networks

DeepTrainer Technical Update

The evolution of neural network architectures moves rapidly. Recently, the rise of Deep Residual Learning (ResNet) caught my attention. The core concept—introducing “skip connections” where the input of a layer is added to the output of a later layer—presented a challenge for DeepTrainer’s existing architecture.

My original design treated a “Network” as a monolithic block of layers. To implement ResNet, I would have needed to hard-code a new “Residual Network” type. This felt rigid. I realized that to stay adaptable, I needed to change the fundamental granularity of the engine.

From Monolith to Dynamic Graph

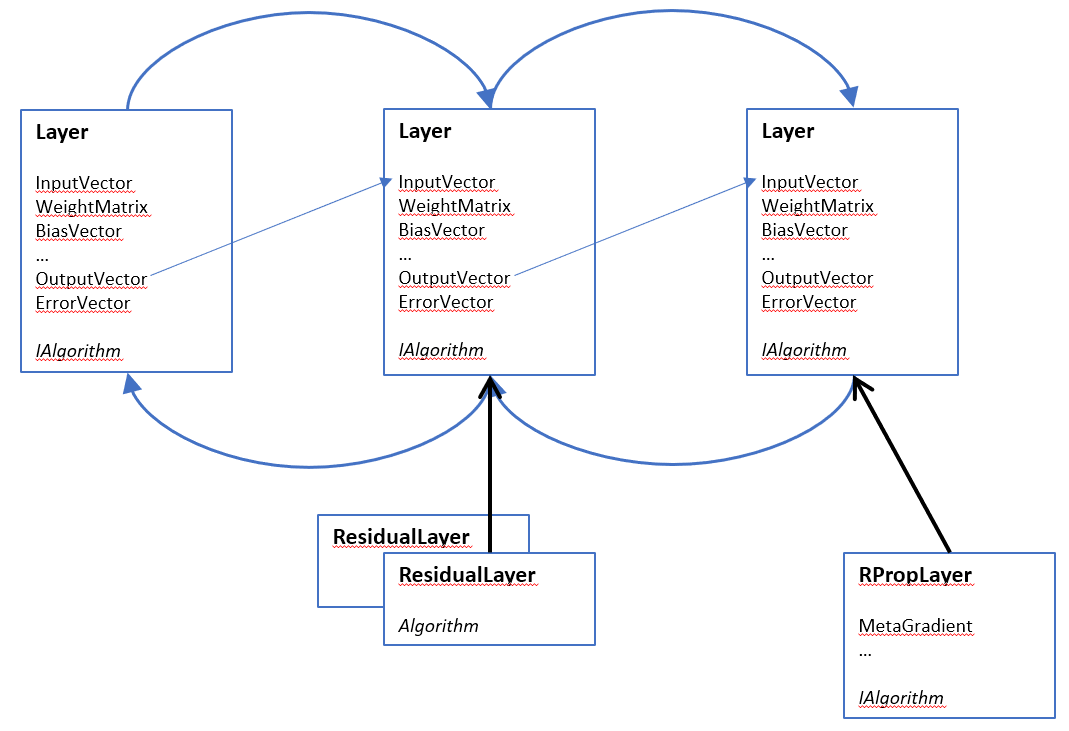

The solution was to refactor the engine so that Algorithms interact with Layers, not Networks.

I have redesigned the core architecture so that the smallest atomic unit of execution is the Layer itself.

- Doubly Linked List: Each layer maintains pointers to its previous and next neighbors.

- Common Interfaces: Layers connect via a standardized interface for input/output vectors.

- Polymorphism: Each specific layer type (Dense, Convolutional, etc.) inherits from a base Layer class and implements its own matrix math.
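The three properties above can be sketched in a few lines. This is a minimal illustration in Python, chosen only for brevity, and not DeepTrainer's actual code: the names `Layer`, `Dense`, `ReLU`, `connect`, `forward`, and `run_forward` are all hypothetical, and plain lists stand in for the real input/output vectors.

```python
from typing import List, Optional


class Layer:
    """Hypothetical base class: one node in a doubly linked chain of layers."""

    def __init__(self) -> None:
        self.prev: Optional["Layer"] = None
        self.next: Optional["Layer"] = None

    def connect(self, nxt: "Layer") -> "Layer":
        """Link self -> nxt in both directions; return nxt so calls can be chained."""
        self.next = nxt
        nxt.prev = self
        return nxt

    def forward(self, x: List[float]) -> List[float]:
        """Common interface: vector in, vector out. Subclasses supply the math."""
        raise NotImplementedError


class Dense(Layer):
    """Fully connected layer: y = W x + b, with one row of W per output unit."""

    def __init__(self, weights: List[List[float]], bias: List[float]) -> None:
        super().__init__()
        self.weights = weights
        self.bias = bias

    def forward(self, x: List[float]) -> List[float]:
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(self.weights, self.bias)]


class ReLU(Layer):
    """Element-wise activation, implemented as its own layer type."""

    def forward(self, x: List[float]) -> List[float]:
        return [max(0.0, v) for v in x]


def run_forward(first: Layer, x: List[float]) -> List[float]:
    """The algorithm walks the chain layer by layer; there is no Network object."""
    layer: Optional[Layer] = first
    while layer is not None:
        x = layer.forward(x)
        layer = layer.next
    return x


if __name__ == "__main__":
    first = Dense([[1.0, -1.0], [0.5, 0.5]], [0.0, 1.0])
    first.connect(ReLU())
    print(run_forward(first, [1.0, 2.0]))
```

Because `run_forward` only relies on the `forward` interface and the `next` pointer, a new layer type can be added without touching the traversal code.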

Why This Matters

This “Dynamic Graph” approach allows for arbitrarily complex topologies.

- Composite Networks: We can now build networks that branch and merge.

- ResNet Support: Implementing a residual block is now just a matter of linking a layer’s output to a summation node further down the chain, without changing the core engine.

- Convolutional Ready: This modularity was the final piece of the puzzle needed to integrate Convolutional Layers cleanly.
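As a rough illustration of the residual case, the sketch below wires a skip connection by giving a summation node a reference to an earlier layer. It is written in Python for brevity and makes several assumptions that are not taken from DeepTrainer: every layer caches its last output, and the classes `Identity`, `Scale` (a stand-in for a real trainable layer), and `Sum` are hypothetical.

```python
from typing import List, Optional


class Layer:
    """Hypothetical base layer that caches its most recent output."""

    def __init__(self) -> None:
        self.prev: Optional["Layer"] = None
        self.next: Optional["Layer"] = None
        self.output: List[float] = []

    def connect(self, nxt: "Layer") -> "Layer":
        self.next, nxt.prev = nxt, self
        return nxt

    def compute(self, x: List[float]) -> List[float]:
        raise NotImplementedError

    def forward(self, x: List[float]) -> List[float]:
        self.output = self.compute(x)  # cached so a later node can read it
        return self.output


class Identity(Layer):
    """Marks the entry of the residual block and remembers the block input."""

    def compute(self, x: List[float]) -> List[float]:
        return list(x)


class Scale(Layer):
    """Stand-in for a trainable layer: multiplies each element by a constant."""

    def __init__(self, factor: float) -> None:
        super().__init__()
        self.factor = factor

    def compute(self, x: List[float]) -> List[float]:
        return [self.factor * v for v in x]


class Sum(Layer):
    """Summation node: adds the cached output of `skip_from` to its own input."""

    def __init__(self, skip_from: Layer) -> None:
        super().__init__()
        self.skip_from = skip_from

    def compute(self, x: List[float]) -> List[float]:
        return [a + b for a, b in zip(x, self.skip_from.output)]


def run_forward(first: Layer, x: List[float]) -> List[float]:
    """Same traversal as for a plain chain; the skip needs no special casing."""
    layer: Optional[Layer] = first
    while layer is not None:
        x = layer.forward(x)
        layer = layer.next
    return x


if __name__ == "__main__":
    entry = Identity()
    entry.connect(Scale(2.0)).connect(Scale(3.0)).connect(Sum(skip_from=entry))
    print(run_forward(entry, [1.0, 2.0]))  # computes F(x) + x with F(x) = 6x
```

The traversal loop is unchanged from the plain-chain case: the skip connection lives entirely in how the `Sum` node is linked, which is the point of moving the granularity down to the layer. A backward pass would also need to route gradients along both paths, which this sketch leaves out.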

I have kept the old algorithms, at least for now: they already run very efficiently and cover a wide range of problems. The redesign lives alongside them as a separate architecture in its own DLL.

Resilient Propagation Support

Currently, this new architecture supports standard Backpropagation and Resilient Propagation (RProp). For RProp, I implemented a specialized ResilientPropagationLayer that extends the base layer to hold the necessary MetaGradient and Decision matrices.
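To show why RProp needs extra per-layer state, here is a minimal Python sketch of the iRprop- variant of the update rule. It is an assumption-laden illustration, not the DeepTrainer implementation: the text does not define the MetaGradient and Decision matrices, so the sketch uses the conventional per-weight state instead (the previous gradient and an adaptive step size), which may not map one-to-one onto them. The `update` method and the hyperparameter defaults are likewise hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def sign(v: float) -> int:
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


class Layer:
    """Hypothetical base layer holding only the weights."""

    def __init__(self, weights: Matrix) -> None:
        self.weights = weights


class ResilientPropagationLayer(Layer):
    """Extends the base layer with the per-weight state that Rprop needs."""

    def __init__(self, weights: Matrix, step_init: float = 0.1,
                 eta_plus: float = 1.2, eta_minus: float = 0.5,
                 step_min: float = 1e-6, step_max: float = 50.0) -> None:
        super().__init__(weights)
        # Assumed state: one step size and one previous gradient per weight.
        self.step = [[step_init for _ in row] for row in weights]
        self.prev_grad = [[0.0 for _ in row] for row in weights]
        self.eta_plus, self.eta_minus = eta_plus, eta_minus
        self.step_min, self.step_max = step_min, step_max

    def update(self, grad: Matrix) -> None:
        """Apply one iRprop- step; only the sign of each gradient is used."""
        for i, row in enumerate(grad):
            for j, g in enumerate(row):
                change = g * self.prev_grad[i][j]
                if change > 0:
                    # Same direction as last time: grow the step.
                    self.step[i][j] = min(self.step[i][j] * self.eta_plus,
                                          self.step_max)
                elif change < 0:
                    # Sign flipped, so a minimum was overshot: shrink the
                    # step and skip the weight update for this iteration.
                    self.step[i][j] = max(self.step[i][j] * self.eta_minus,
                                          self.step_min)
                    g = 0.0
                self.weights[i][j] -= sign(g) * self.step[i][j]
                self.prev_grad[i][j] = g


if __name__ == "__main__":
    layer = ResilientPropagationLayer([[1.0]])
    for g in (2.0, 3.0, -1.0):
        layer.update([[g]])
        print(layer.weights, layer.step)
```

Since the update depends on gradient signs and on state carried between iterations, that state has to live somewhere with the same shape as the weights. Holding it in a layer subclass keeps it next to the weights it adapts.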

The code for this new Dynamic Graph engine resides in a separate DLL for now, ensuring the stability of the legacy algorithms while we build out the wrappers and test harnesses for this next-generation core.

This new architecture does not handle distributed algorithms yet, so only Backpropagation and Resilient Propagation are available. Once these are working, I will add a general distributed execution mode that splits the work along the training data. The code is already available in the repository, but I am only now starting to create wrappers and test applications for it.